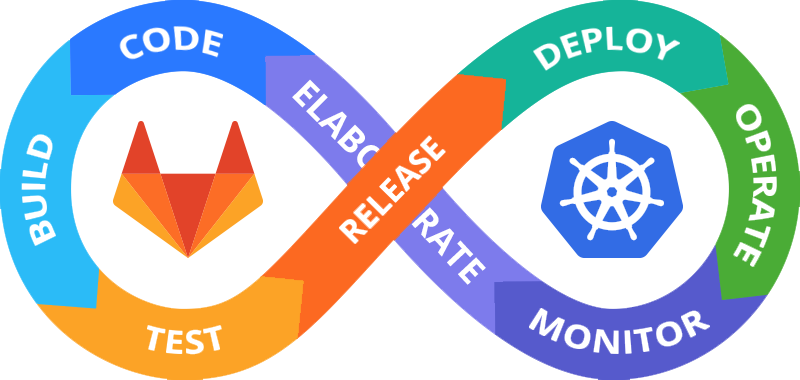

In a recent project, I set up a Continuous Integration/Continuous Delivery (CI/CD) pipeline using GitLab Community Edition (CE), which I deployed on a cloud server. My goal was an automated workflow that would keep our codebase robust and reliable at every stage of development.

I began by selecting a cloud service provider that offered the scalability and uptime a CI/CD environment needs. After provisioning the server, I installed and configured GitLab CE along with its dependencies, such as PostgreSQL for the database and Redis for caching, to keep the pipeline performant.

The core of the CI/CD pipeline was defined in the .gitlab-ci.yml configuration file. I wrote this file to set up multiple stages: building the code, running automated tests, and deploying to a test environment. Each commit to a feature branch triggered the pipeline, which compiled the code to catch syntax errors early, ran the tests, and then deployed the build to a test server that mirrored our production environment.
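A minimal sketch of what such a file could look like, assuming a project built with make. The job names, the make targets, the `build/` output directory, and the `deploy.sh` script are placeholders for illustration, not the actual configuration:

```yaml
# Hypothetical .gitlab-ci.yml sketch: three stages run in order.
stages:
  - build
  - test
  - deploy

# Create pipelines for branch pushes.
workflow:
  rules:
    - if: '$CI_COMMIT_BRANCH'

build:
  stage: build
  script:
    - make build            # assumed build command; fails fast on compile errors
  artifacts:
    paths:
      - build/              # assumed output directory, passed on to later stages

test:
  stage: test
  script:
    - make test             # assumed test entry point

deploy_test_env:
  stage: deploy
  script:
    - ./scripts/deploy.sh test   # hypothetical deploy script and argument
  environment:
    name: test
  rules:
    # Deploy feature-branch builds only, not the default branch.
    - if: '$CI_COMMIT_BRANCH != $CI_DEFAULT_BRANCH'
```

Because stages run sequentially, a failed build stops the pipeline before any tests or deployment start.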

Automated tests played a critical role; I integrated unit, integration, and functional tests to ensure code quality. This setup let us hold our main branch to a high standard, since code was merged only after passing all tests and reviews. I also used the cloud server’s automated backup and scaling capabilities so the infrastructure could handle the CI/CD workload efficiently.
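One way the three test types could be laid out, shown as a hedged fragment: each type gets its own job in the test stage, so a failure points directly at the kind of test that broke. The job names and make targets below are assumptions, not the project's real commands:

```yaml
# Hypothetical test jobs; jobs in the same stage can run in parallel
# when enough runners are available.
unit_tests:
  stage: test
  script:
    - make test-unit          # assumed target

integration_tests:
  stage: test
  script:
    - make test-integration   # assumed target

functional_tests:
  stage: test
  script:
    - make test-functional    # assumed target
```

Blocking merges until the pipeline is green is typically handled in the project's merge request settings rather than in this file.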

Implementing this pipeline fostered a culture of regular, early integration, which significantly reduced integration problems and led to more reliable software builds. It also freed the development team to focus on new features rather than getting bogged down in repetitive testing and integration tasks.

This project not only improved our deployment frequency but also enhanced the overall quality of our code. It’s a testament to my commitment to adopting best practices in software development and my ability to leverage modern cloud-based solutions to drive productivity and quality.
